Quick Start with skdag¶

The following tutorial shows you how to write some simple directed acyclic graphs (DAGs)

with skdag.

Installation¶

Installing skdag is simple:

pip install skdag

Note that to visualise graphs you need to install the graphviz libraries too. See the pygraphviz documentation for installation guidance.

Creating a DAG¶

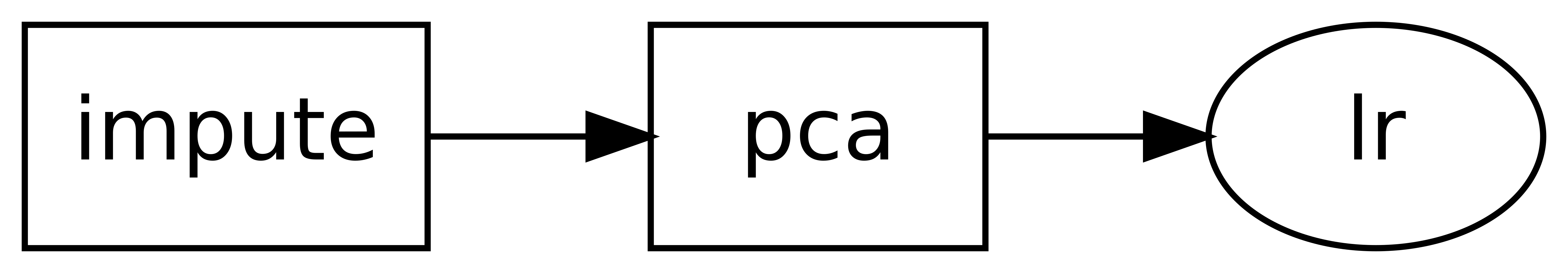

The simplest DAGs are just a chain of singular dependencies. These DAGs may be

created from the skdag.dag.DAG.from_pipeline() method in the same way as a

DAG:

>>> from skdag import DAGBuilder

>>> from sklearn.decomposition import PCA

>>> from sklearn.impute import SimpleImputer

>>> from sklearn.linear_model import LogisticRegression

>>> dag = DAGBuilder().from_pipeline(

... steps=[

... ("impute", SimpleImputer()),

... ("pca", PCA()),

... ("lr", LogisticRegression())

... ]

... ).make_dag()

>>> dag.show()

o impute

|

o pca

|

o lr

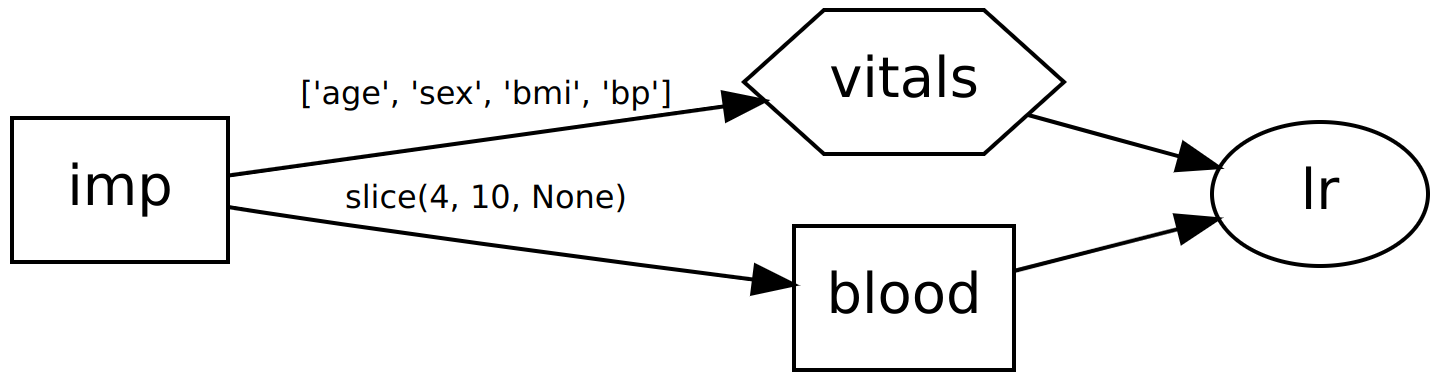

For more complex DAGs, it is recommended to use a skdag.dag.DAGBuilder,

which allows you to define the graph by specifying the dependencies of each new

estimator:

>>> dag = (

... DAGBuilder(infer_dataframe=True)

... .add_step("impute", SimpleImputer())

... .add_step("vitals", "passthrough", deps={"impute": ["age", "sex", "bmi", "bp"]})

... .add_step("blood", PCA(n_components=2, random_state=0), deps={"impute": slice(4, 10)})

... .add_step("lr", LogisticRegression(random_state=0), deps=["blood", "vitals"])

... .make_dag()

... )

>>> dag.show()

o impute

|\

o o blood,vitals

|/

o lr

In the above examples we pass the first four columns directly to a regressor, but the remaining columns have dimensionality reduction applied first before being passed to the same regressor as extra input columns.

In this DAG, as well as using the deps option to control which estimators feed in to

other estimators, but which columns are used (and ignored) by each step. For more detail

on how to control this behaviour, see the User Guide.

The DAG may now be used as an estimator in its own right:

>>> from sklearn import datasets

>>> X, y = datasets.load_diabetes(return_X_y=True, as_frame=True)

>>> type(dag.fit_predict(X, y))

<class 'pandas.core.series.Series'>

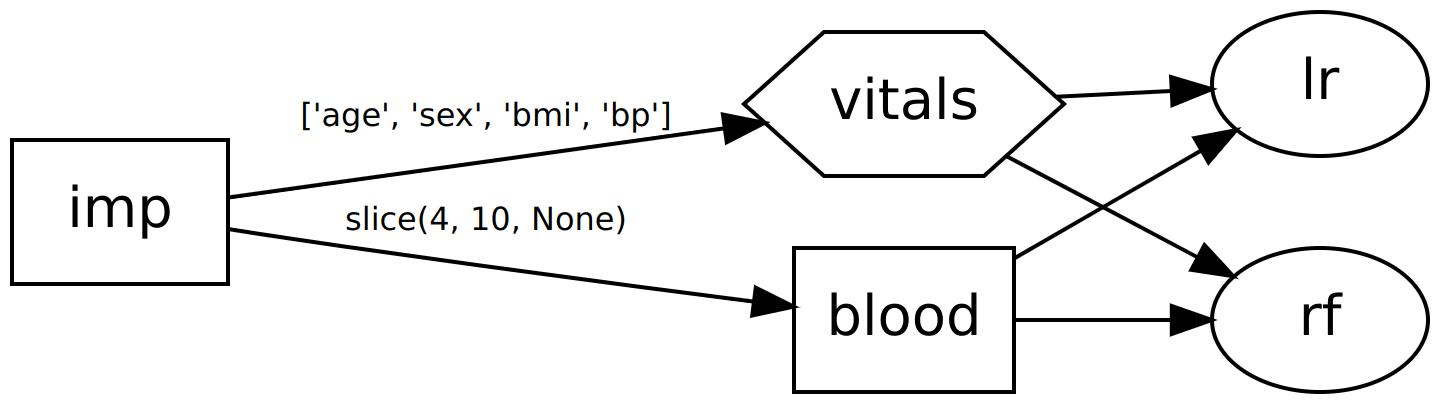

In an extension to the scikit-learn estimator interface, DAGs also support multiple inputs and multiple outputs. Let’s say we want to compare two different classifiers:

>>> from sklearn.ensemble import RandomForestClassifier

>>> cal = DAGBuilder(infer_dataframe=True).from_pipeline(

... [("rf", RandomForestClassifier(random_state=0))]

... ).make_dag()

>>> dag2 = dag.join(cal, edges=[("blood", "rf"), ("vitals", "rf")])

>>> dag2.show()

o impute

|\

o o blood,vitals

|x|

o o lr,rf

Now our DAG will return two outputs: one from each classifier. Multiple outputs are

returned as a sklearn.utils.Bunch:

>>> y_pred = dag2.fit_predict(X, y)

>>> type(y_pred.lr)

<class 'pandas.core.series.Series'>

>>> type(y_pred.rf)

<class 'pandas.core.series.Series'>

Similarly, multiple inputs are also acceptable and inputs can be provided by

specifying X and y as dict-like objects.